Our last TENGU platform update focused on enabling data science and ML pipeline orchestration. Since then, we’ve been working hard on the next step: data analytics orchestration. We’re taking our platform to the next level with automation, self-service, and monitoring capabilities for all data roles.

Let’s look at the update and how data analysts can use the TENGU platform to set up their data flows.

Contents

- Integrations

- Pipeline setup

- Data collection

- Pipeline run automation

- Monitoring data flows

- Future updates

Easily integrate your data sources as graph elements.

Picture this: you want to use a new data source for your analysis, but you depend on a data engineer or the IT team for their technical skills, and waiting for them costs precious time.

To make you independent and save time, we’ve automated data integration so that no technical knowledge is needed. You can integrate your analytics data yourself and get to work on it right away.

💡 Elements are representations of data resources of different kinds and are deployed automatically in the graph view.

In addition to the API data sources we already supported, we’ve added support for essential analytics data sources. You can now integrate sources such as spreadsheets with a couple of clicks, while the TENGU platform automates the rest.

(Pictured: A group consisting of a Google Sheets element with multiple tab elements and a group consisting of a Google Analytics element with various data elements.)

This way, data analysts can integrate data sources themselves, which means less pressure on all teams and faster results. And voilà, the data is ready to use, and you can start working with it right away in the TENGU platform itself.

💡 Want to know more about overcoming data accessibility roadblocks?

Read more here: BLOG: How to overcome 3 data accessibility roadblocks for business analytics.

Set up your data pipelines with no code.

Next up, you want to connect these data sources to the data repository of your choice. Usually, you’d have to use tools that are either too complex and technical or not flexible enough.

💡 A data repository is a place that holds data, makes data available to use, and organizes data in a logical manner. (Source)

That’s why we’ve added pipeline elements to extract and load data. You can now set up data pipeline elements quickly with a couple of clicks, while keeping the flexibility to specify exact extraction and loading points. To support this, we use an instance of Dagster, one of the newer data orchestrators for ML, analytics, and EL (more info here: dagster.io).

💡 A data orchestrator is a tool that takes siloed data from multiple data storage locations, combines it, and makes it available to data analysis tools. (Source)

(Pictured: Pipeline element supported by a Dagster element, extracting data from a Google Sheet element into an ArangoDB database.)

Thanks to the Dagster instance, you no longer waste time on yet another complex program to extract and load data manually. Instead, your data is where you want it to be, when you want it to be there.
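If you’re curious what an extract-and-load step does under the hood, here is a minimal Python sketch. It is not the TENGU platform’s or Dagster’s actual implementation: the function names, the sample tab, and the in-memory dictionary standing in for a database collection are all assumptions made for illustration.

```python
import csv
import io


def extract_rows(sheet_csv: str) -> list[dict]:
    """Extract: parse one exported spreadsheet tab into a list of row dicts."""
    return list(csv.DictReader(io.StringIO(sheet_csv)))


def load_rows(rows: list[dict], collection: dict, key_field: str) -> int:
    """Load: upsert each row into the target collection, keyed by key_field."""
    for row in rows:
        collection[row[key_field]] = row
    return len(rows)


# Hypothetical tab contents, standing in for a spreadsheet tab.
orders_tab = "order_id,customer,amount\n1001,Acme,250\n1002,Globex,120\n"

# A plain dict stands in for a database collection here.
orders_collection = {}
loaded = load_rows(extract_rows(orders_tab), orders_collection, key_field="order_id")
print(loaded, sorted(orders_collection))
```

The extraction point (which tab) and the loading point (which collection and key) are the two things you would specify; everything in between is what the platform automates for you.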

Merge your multiple data sources into a single collection.

As a data analyst, you’re probably working with a lot of different tables and sources, and you want to collect and structure that data so that you can use it for your analysis. With the TENGU platform, you can easily collect your data in one of the supported databases or in an S3 bucket.

(Pictured: a more complex pipeline extracting data from multiple Google Sheet elements into an ArangoDB database, combining different tabs of data with various pipeline definition elements.)
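To make the idea of combining tabs concrete, here is a small Python sketch of merging several tabs into one collection. The tab names, field names, and the `source_tab` marker are hypothetical and only illustrate the principle; the platform does this for you through pipeline definition elements.

```python
def merge_tabs(tabs: dict[str, list[dict]]) -> list[dict]:
    """Combine rows from several tabs into one list, noting each row's source tab."""
    merged = []
    for tab_name, rows in tabs.items():
        for row in rows:
            # Copy the row and record which tab it came from.
            merged.append({**row, "source_tab": tab_name})
    return merged


# Hypothetical tabs from one spreadsheet.
tabs = {
    "sales_2022": [{"region": "EU", "revenue": 100}],
    "sales_2023": [{"region": "EU", "revenue": 140}, {"region": "US", "revenue": 90}],
}

collection = merge_tabs(tabs)
print(len(collection), collection[0]["source_tab"])
```

Keeping track of the source tab on every record makes it easy to trace a value in your analysis back to where it came from.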

If you want to include a transformation element in your pipelines, you can do this in the TENGU platform thanks to our previous Kubernetes- and JupyterHub-related update. This way, you can write scripts that turn your data collection pipeline into a transformation pipeline.
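A transformation script can be very small. The sketch below, with hypothetical field names and sample rows, aggregates collected records into revenue per region; it is only meant to show the kind of script you could write, not a prescribed format.

```python
def revenue_per_region(rows: list[dict]) -> dict[str, float]:
    """Transform: sum the revenue field per region across all collected rows."""
    totals: dict[str, float] = {}
    for row in rows:
        region = row["region"]
        totals[region] = totals.get(region, 0.0) + float(row["revenue"])
    return totals


# Hypothetical collected rows, as they might come out of a collection pipeline.
collected_rows = [
    {"region": "EU", "revenue": "100"},
    {"region": "EU", "revenue": "140"},
    {"region": "US", "revenue": "90"},
]

totals = revenue_per_region(collected_rows)
print(totals)
```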

Now that you’ve got your data pipelines set up, all that’s left is to let them run at the desired synchronisation frequency.

Synchronisation of data pipelines

When you start your day, you want your data to be updated and ready for analysis. When you need real-time data, however, you’ll want to synchronise the data you’re working with right away. You want to be able to do both, ideally from within the same program.

A pipeline run takes a single button press within the element on the TENGU platform (in the ‘Actions’ tab). This way, your collections are easily synchronised and up to date with your latest data sources. These runs are also logged in the ‘Runs’ tab of the pipeline element.

Of course, you won’t have to execute these runs manually every time you want synchronised data. We’ve included a way to schedule recurring pipeline runs at the time that suits you best. So you can start every day with data already synchronised.
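To illustrate what a recurring schedule boils down to, here is a minimal Python sketch that works out when the next daily run should happen. The function name and the 06:00 run time are assumptions for the example; in orchestrators, a schedule like this is commonly written as a cron expression such as `0 6 * * *`.

```python
from datetime import datetime, time, timedelta


def next_daily_run(now: datetime, run_at: time) -> datetime:
    """Return the next moment a daily run at `run_at` is due, strictly after `now`."""
    candidate = datetime.combine(now.date(), run_at)
    if candidate <= now:
        # Today's slot has already passed, so the next run is tomorrow.
        candidate += timedelta(days=1)
    return candidate


# Hypothetical schedule: synchronise every day at 06:00.
run_time = time(6, 0)
before_slot = next_daily_run(datetime(2024, 1, 15, 5, 0), run_time)
after_slot = next_daily_run(datetime(2024, 1, 15, 9, 30), run_time)
print(before_slot, after_slot)
```

Picking a run time shortly before your working day starts means the data is already synchronised when you open your first report.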

Monitor with directional flow, alerts & notifications

One of the biggest enemies of a data analyst is bad data: data that is inaccurate for your business. If you’ve been delivering reports based on incomplete data, you lose value, both in your data collection and in possible revenue lost to a botched insight.

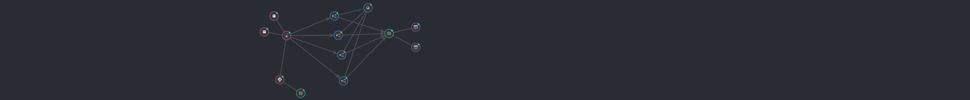

Want to know if everything is connected properly? We’ve tuned the graph view so it’s easier to monitor your data environment. For example, we’ve made it possible to see directional relations between elements, such as a data flow, so you can find your way around the graph more quickly. You can also see at a glance whether an element has encountered any errors, indicated by a coloured dot on the element in the graph overview.

💡 Element colour codes: real-time status of the resource

: No issues

: Simple connection issue

: Multiple connection issues

Want more info on these status conditions in the TENGU platform?

You can find out exactly which status failed in the ‘Code’ tab of the element.

(Pictured: The System status and notifications side nav that shows all changes and updates)

As you can see above, we’ve also improved the system status and notifications tab to log more activity in the TENGU platform. The logging includes information about events, the related element, the user performing the action, and accurate timestamps.

To ease monitoring in general, we’ve upgraded the filters: you can now save them, and a couple of quality-of-life changes improve the search functionality. So you can focus on what you need right away.

With all these changes, we want to enable you to monitor your data environment quickly through the graph view. With the system status and notifications tab, you’re immediately caught up with the latest changes and ready to collaborate with your team more efficiently.

So, what's next?

That’s it for now! However, we’re far from done with our plans for the TENGU platform, and we can already share what you can expect next.

We'll be adding more supported integrations, and we are working on adding Superset, a tool for creating dashboards. This visualisation tool will seamlessly integrate with the current E(T)L pipelines for data analysts.

We hope you like these new features and supported integrations. But are we missing an important one?

Let us know what you want to see supported in the TENGU platform!

And on top of common technologies and resources, we can always add customised integrations for you and your company.

Want to see the platform in action?